Arabic - Wikilangs Models

Comprehensive Research Report & Full Ablation Study

This repository contains NLP models trained and evaluated by Wikilangs, specifically on Arabic Wikipedia data. We analyze tokenizers, n-gram models, Markov chains, vocabulary statistics, and word embeddings.

📋 Repository Contents

Models & Assets

- Tokenizers (8k, 16k, 32k, 64k)

- N-gram models (2, 3, 4, 5-gram)

- Markov chains (context of 1, 2, 3, 4 and 5)

- Subword N-gram and Markov chains

- Embeddings in various sizes and dimensions (aligned and unaligned)

- Language Vocabulary

- Language Statistics

Analysis and Evaluation

- 1. Tokenizer Evaluation

- 2. N-gram Model Evaluation

- 3. Markov Chain Evaluation

- 4. Vocabulary Analysis

- 5. Word Embeddings Evaluation

- 6. Morphological Analysis (Experimental)

- 7. Summary & Recommendations

- Metrics Glossary

- Visualizations Index

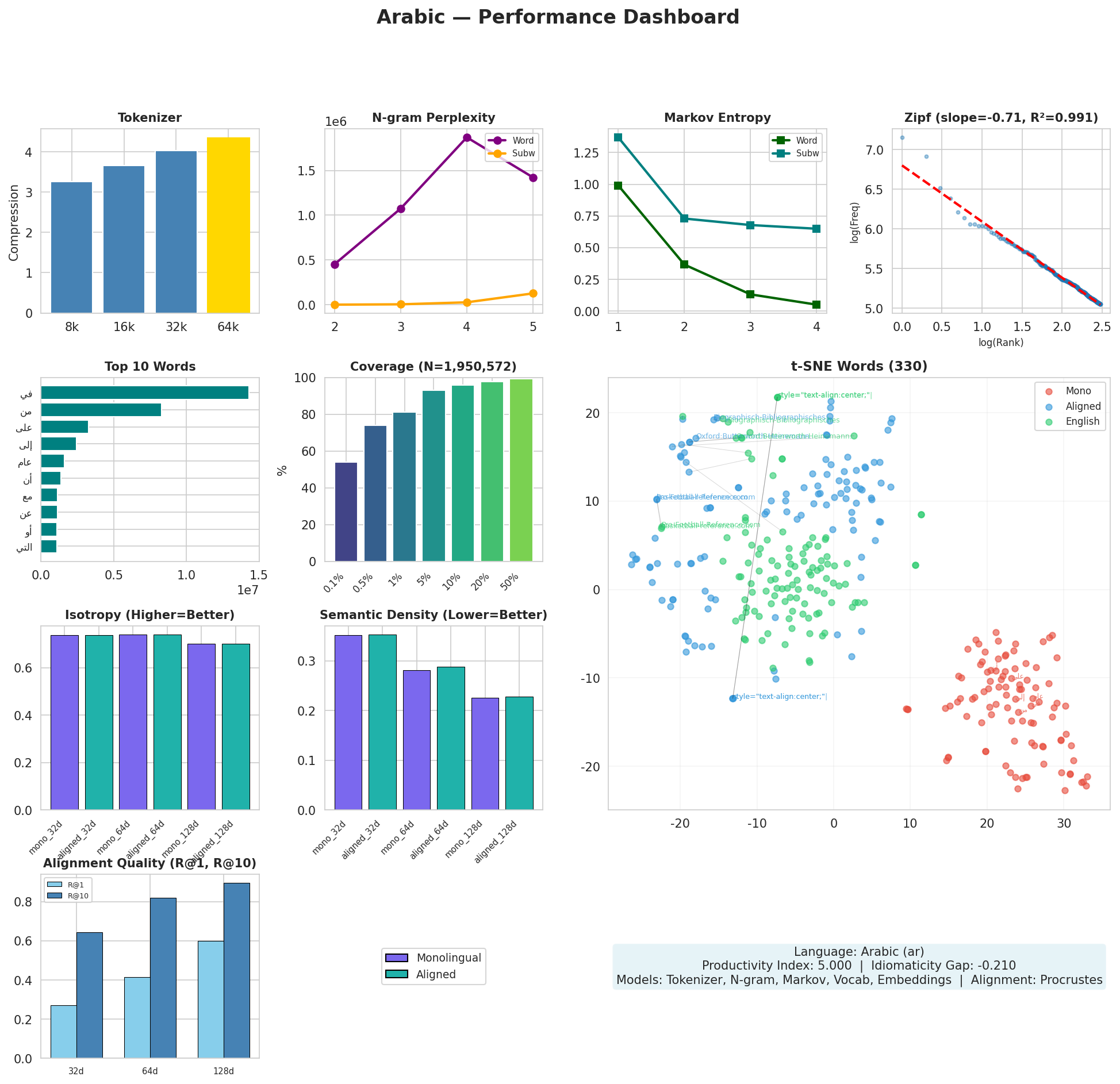

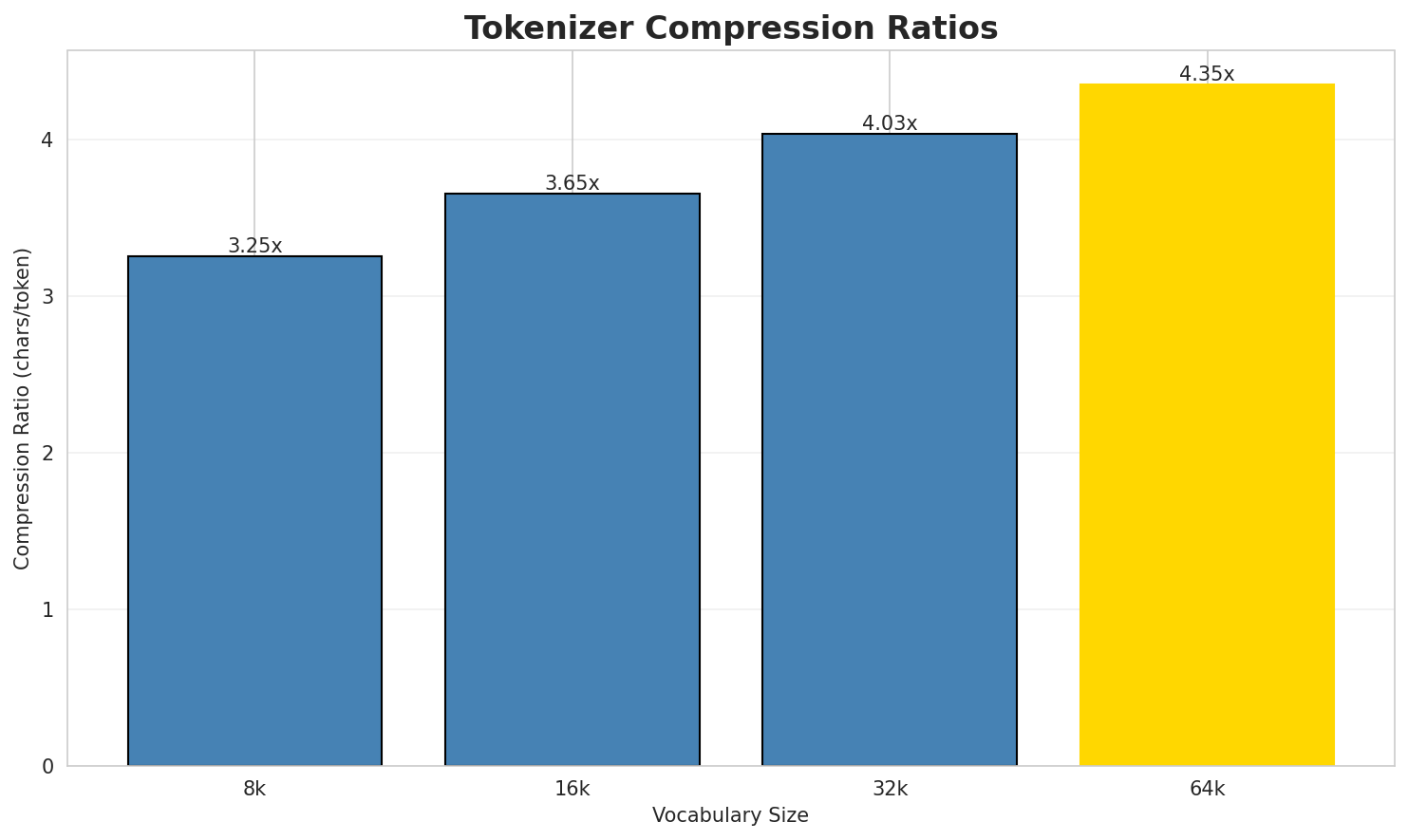

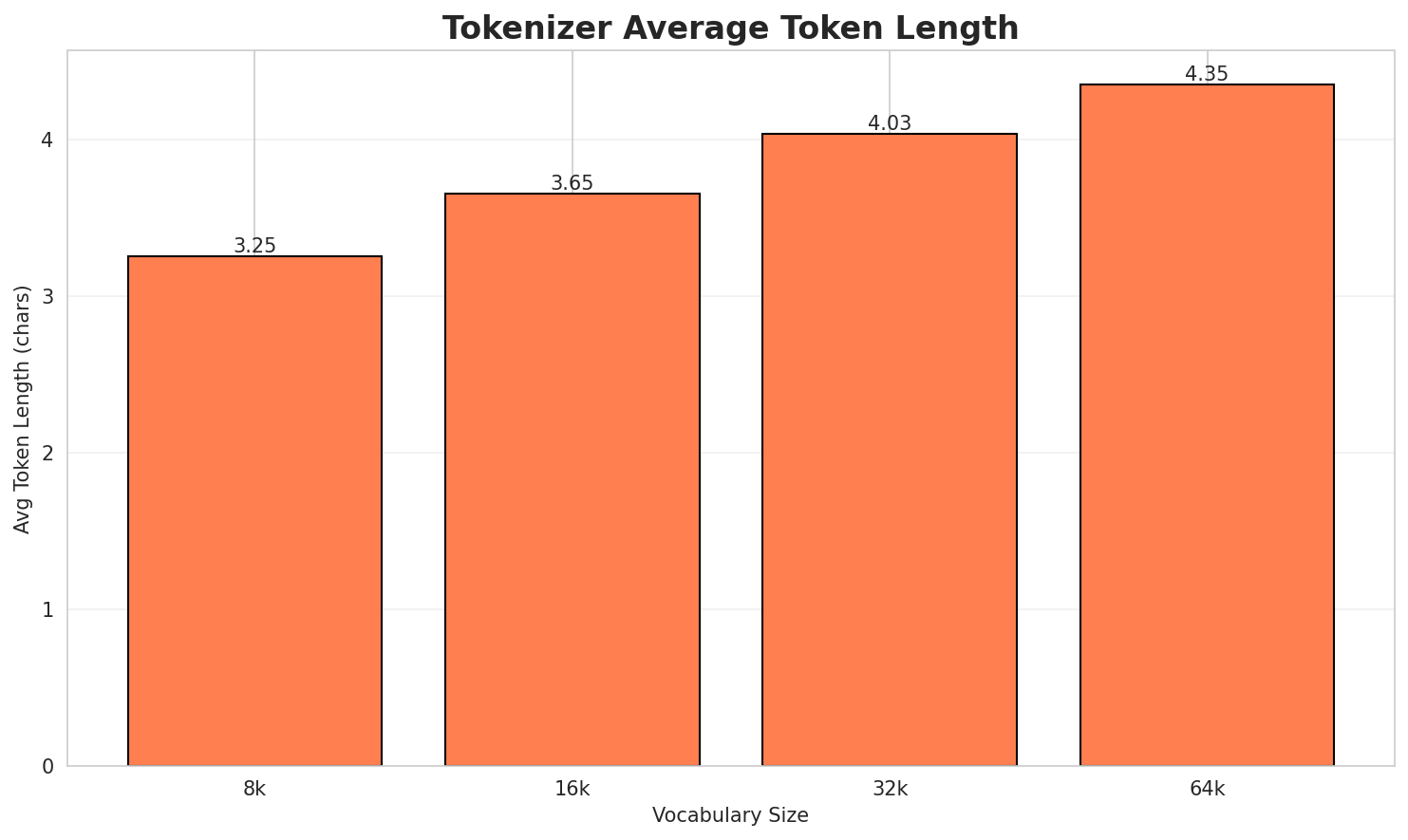

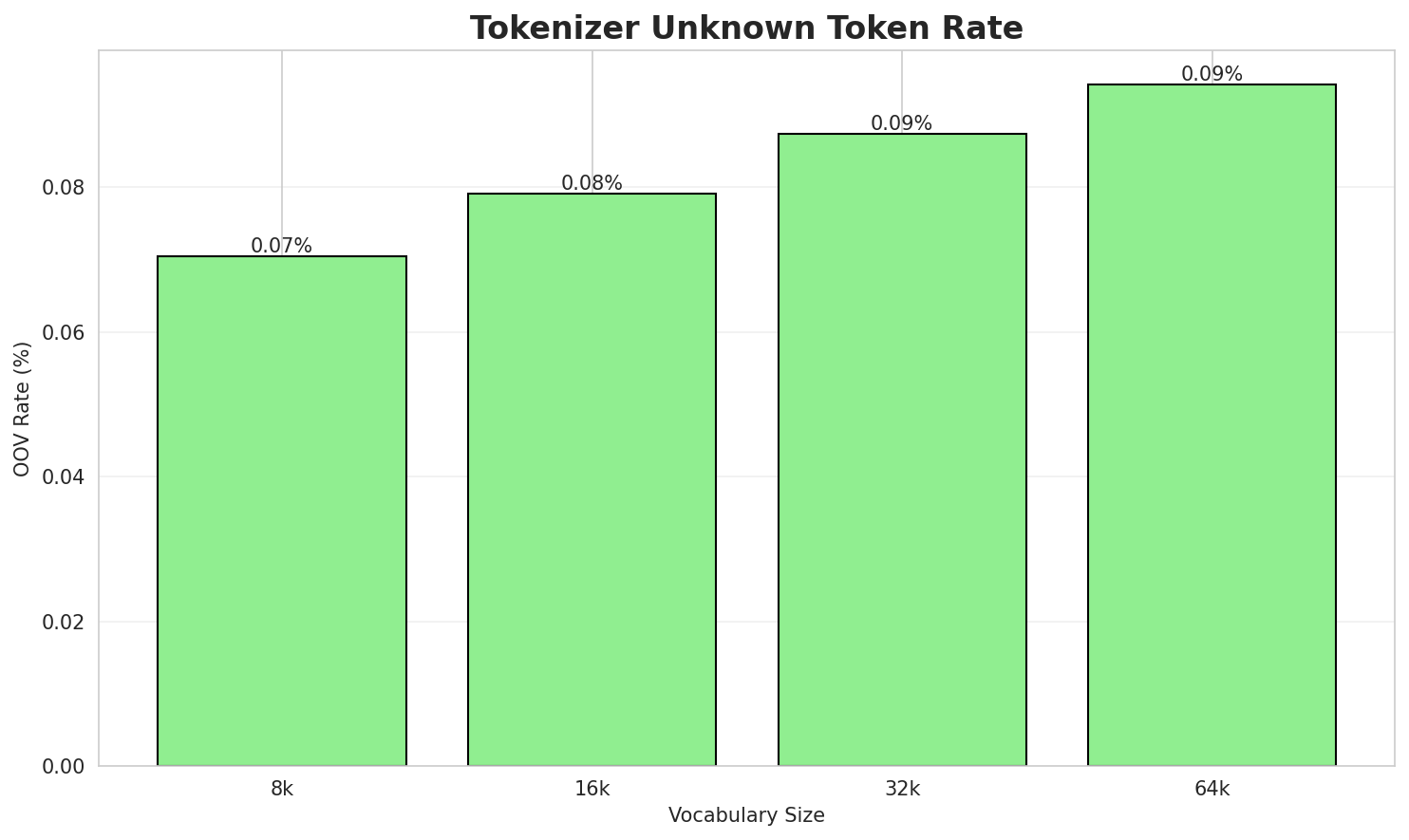

1. Tokenizer Evaluation

Results

| Vocab Size | Compression | Avg Token Len | UNK Rate | Total Tokens |

|---|---|---|---|---|

| 8k | 3.252x | 3.25 | 0.0704% | 5,499,500 |

| 16k | 3.655x | 3.65 | 0.0791% | 4,893,689 |

| 32k | 4.034x | 4.03 | 0.0873% | 4,433,903 |

| 64k | 4.347x 🏆 | 4.35 | 0.0941% | 4,114,555 |

Tokenization Examples

Below are sample sentences tokenized with each vocabulary size:

Sample 1: بيغجة خاتون هي قرية في مقاطعة شبستر، إيران. يقدر عدد سكانها بـ 635 نسمة بحسب إحص...

| Vocab | Tokens | Count |
|---|---|---|
| 8k | ▁بي غ جة ▁خ ات ون ▁هي ▁قرية ▁في ▁مقاطعة ... (+26 more) | 36 |
| 16k | ▁بي غ جة ▁خ ات ون ▁هي ▁قرية ▁في ▁مقاطعة ... (+23 more) | 33 |
| 32k | ▁بيغ جة ▁خاتون ▁هي ▁قرية ▁في ▁مقاطعة ▁شب ستر ، ... (+20 more) | 30 |
| 64k | ▁بيغ جة ▁خاتون ▁هي ▁قرية ▁في ▁مقاطعة ▁شب ستر ، ... (+20 more) | 30 |

Sample 2: IL18BP (Interleukin 18 binding protein) هوَ بروتين يُشَفر بواسطة جين IL18BP في ا...

| Vocab | Tokens | Count |
|---|---|---|
| 8k | ▁ il 1 8 b p ▁( in ter le ... (+51 more) | 61 |
| 16k | ▁il 1 8 b p ▁( in ter le uk ... (+44 more) | 54 |
| 32k | ▁il 1 8 b p ▁( inter le uk in ... (+39 more) | 49 |
| 64k | ▁il 1 8 b p ▁( inter le uk in ... (+36 more) | 46 |

Sample 3: هي مقاطعة في ولاية قشقداريا في أوزبكستان، ومركزها مدينة شهرسبز. المصادر مأهولة ف...

| Vocab | Tokens | Count |
|---|---|---|
| 8k | ▁هي ▁مقاطعة ▁في ▁ولاية ▁ق ش قد اريا ▁في ▁أوزب ... (+18 more) | 28 |
| 16k | ▁هي ▁مقاطعة ▁في ▁ولاية ▁ق ش قد اريا ▁في ▁أوزبكستان ... (+16 more) | 26 |
| 32k | ▁هي ▁مقاطعة ▁في ▁ولاية ▁قش قد اريا ▁في ▁أوزبكستان ، ... (+13 more) | 23 |
| 64k | ▁هي ▁مقاطعة ▁في ▁ولاية ▁قش قد اريا ▁في ▁أوزبكستان ، ... (+13 more) | 23 |

Key Findings

- Best Compression: 64k achieves 4.347x compression

- Lowest UNK Rate: 8k with 0.0704% unknown tokens

- Trade-off: Larger vocabularies improve compression but increase model size

- Recommendation: 32k vocabulary provides optimal balance for production use

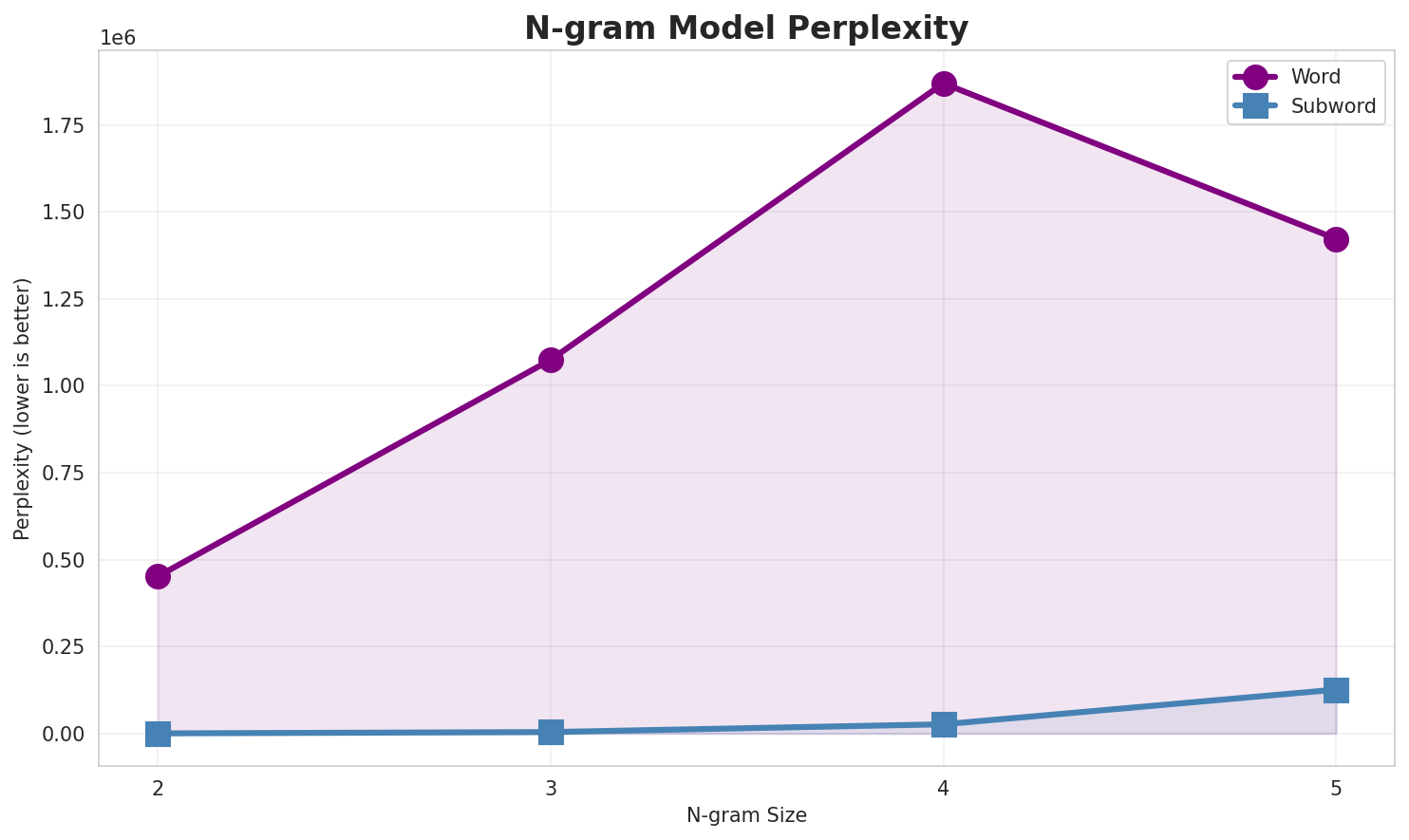

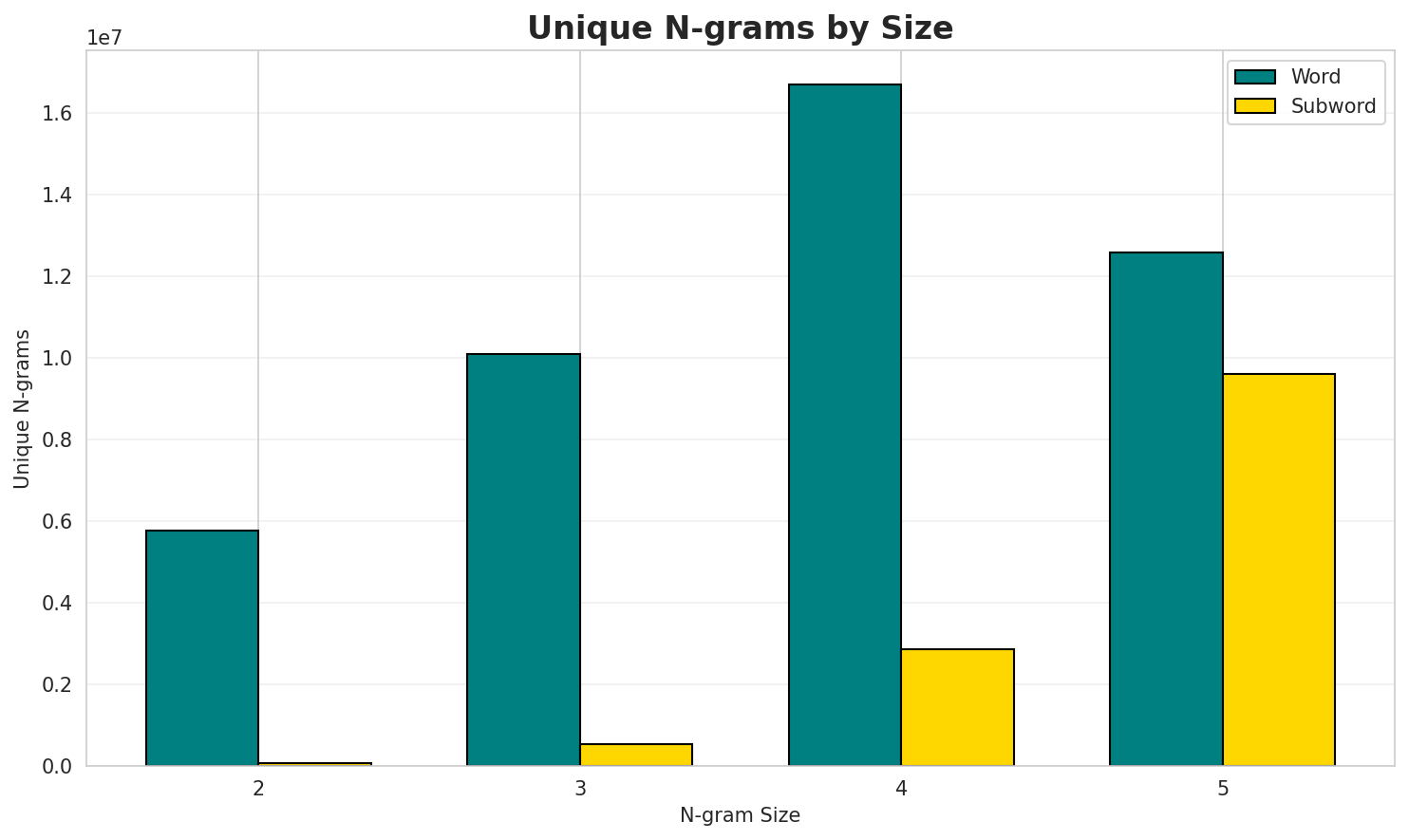

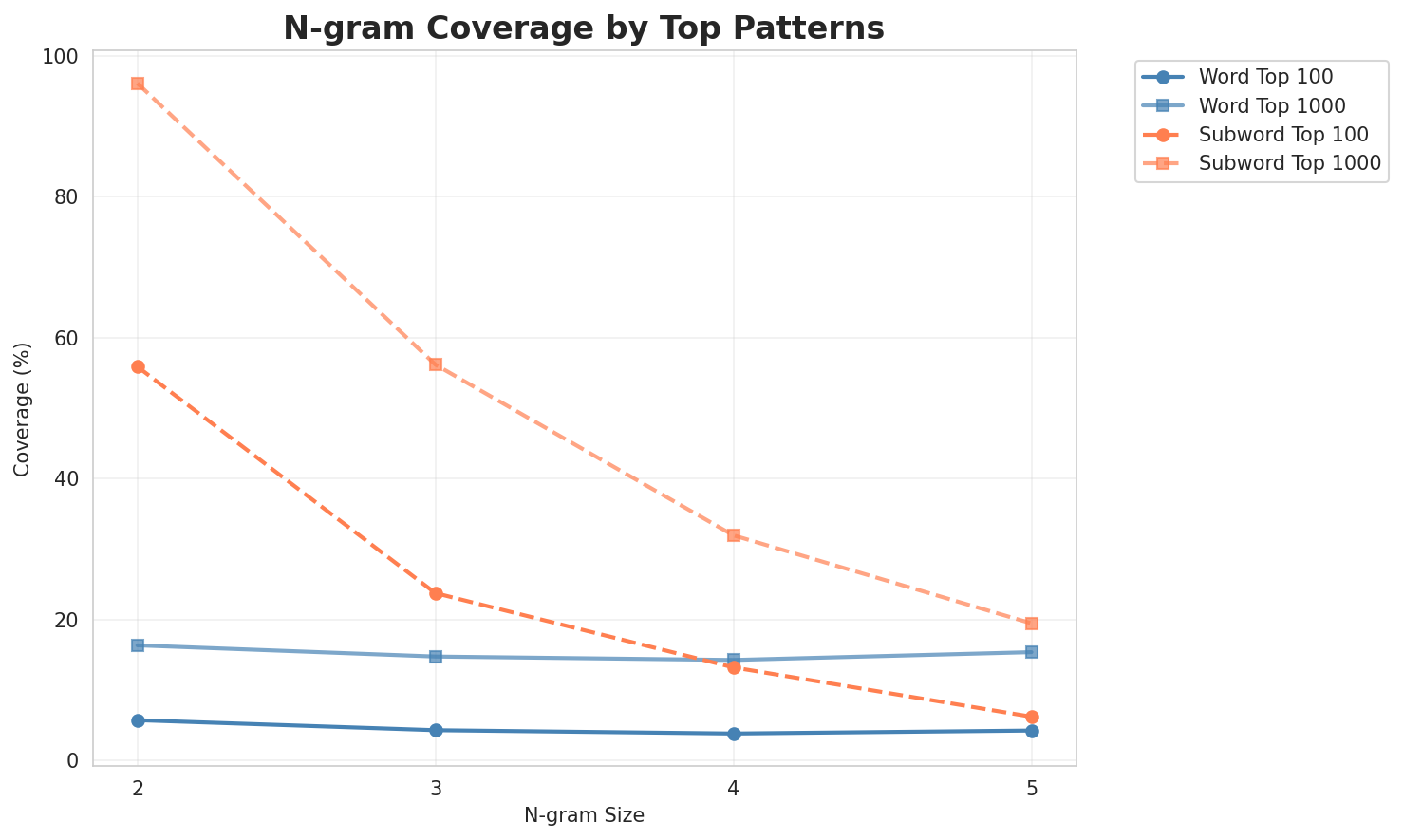

2. N-gram Model Evaluation

Results

| N-gram | Variant | Perplexity | Entropy | Unique N-grams | Top-100 Coverage | Top-1000 Coverage |

|---|---|---|---|---|---|---|

| 2-gram | Word | 452,226 | 18.79 | 5,760,373 | 5.7% | 16.3% |

| 2-gram | Subword | 436 🏆 | 8.77 | 70,700 | 55.9% | 96.1% |

| 3-gram | Word | 1,074,568 | 20.04 | 10,101,258 | 4.3% | 14.7% |

| 3-gram | Subword | 4,203 | 12.04 | 528,264 | 23.7% | 56.2% |

| 4-gram | Word | 1,869,871 | 20.83 | 16,693,684 | 3.8% | 14.3% |

| 4-gram | Subword | 26,613 | 14.70 | 2,851,427 | 13.2% | 31.9% |

| 5-gram | Word | 1,422,629 | 20.44 | 12,591,346 | 4.2% | 15.4% |

| 5-gram | Subword | 126,300 | 16.95 | 9,618,770 | 6.2% | 19.5% |

Top 5 N-grams by Size

2-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | كرة قدم | 754,062 |
| 2 | في القرن | 693,987 |
| 3 | في عام | 580,274 |
| 4 | الولايات المتحدة | 468,192 |
| 5 | وصلات خارجية | 357,388 |

3-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | في القرن 20 | 274,915 |
| 2 | مراجع وصلات خارجية | 255,117 |
| 3 | في الولايات المتحدة | 245,241 |
| 4 | في القرن 21 | 238,844 |
| 5 | أمريكيون في القرن | 166,269 |

4-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | كرة قدم مغتربون في | 94,639 |
| 2 | تحت سن الثامنة عشر | 93,897 |
| 3 | هو لاعب كرة قدم | 93,478 |
| 4 | أمريكيون في القرن 20 | 87,276 |
| 5 | في الألعاب الأولمبية الصيفية | 66,167 |

5-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | تعداد عام بلغ عدد سكان | 38,914 |
| 2 | بحسب تعداد عام وبلغ عدد | 38,787 |
| 3 | تعداد عام وبلغ عدد الأسر | 38,786 |
| 4 | نسمة بحسب تعداد عام وبلغ | 38,783 |
| 5 | في الفئة العمرية ما بين | 38,744 |

2-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | ا ل | 88,022,277 |
| 2 | _ ا | 75,496,816 |
| 3 | ة _ | 45,404,729 |
| 4 | ي _ | 32,155,198 |
| 5 | ن _ | 31,357,117 |

3-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | _ ا ل | 71,328,243 |
| 2 | _ ف ي | 15,404,541 |
| 3 | ف ي _ | 15,103,296 |
| 4 | ي ة _ | 14,752,185 |
| 5 | ا ل م | 13,544,149 |

4-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | _ ف ي _ | 14,189,454 |
| 2 | ة _ ا ل | 12,269,528 |
| 3 | _ ا ل م | 11,772,138 |
| 4 | _ م ن _ | 8,237,350 |
| 5 | ي _ ا ل | 7,703,248 |

5-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | ف ي _ ا ل | 4,810,645 |
| 2 | _ ف ي _ ا | 4,774,417 |
| 3 | ا ت _ ا ل | 3,857,996 |
| 4 | ي ة _ ا ل | 3,696,976 |
| 5 | _ ع ل ى _ | 3,259,756 |

Key Findings

- Best Perplexity: 2-gram (subword) with 436

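- Entropy Trend: Increases with n-gram size in the results above (word: 18.79 bits at n=2, peaking at 20.83 at n=4; subword: 8.77 at n=2 to 16.95 at n=5), because longer n-grams spread the counts over many more unique sequences

- Coverage: The top-1000 patterns cover between 14.3% (4-gram word) and 96.1% (2-gram subword) of the corpus; the 5-gram subword figure is 19.5%

- Recommendation: 4-gram or 5-gram when longer context matters most; the 2-gram subword model has the lowest perplexity (436)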

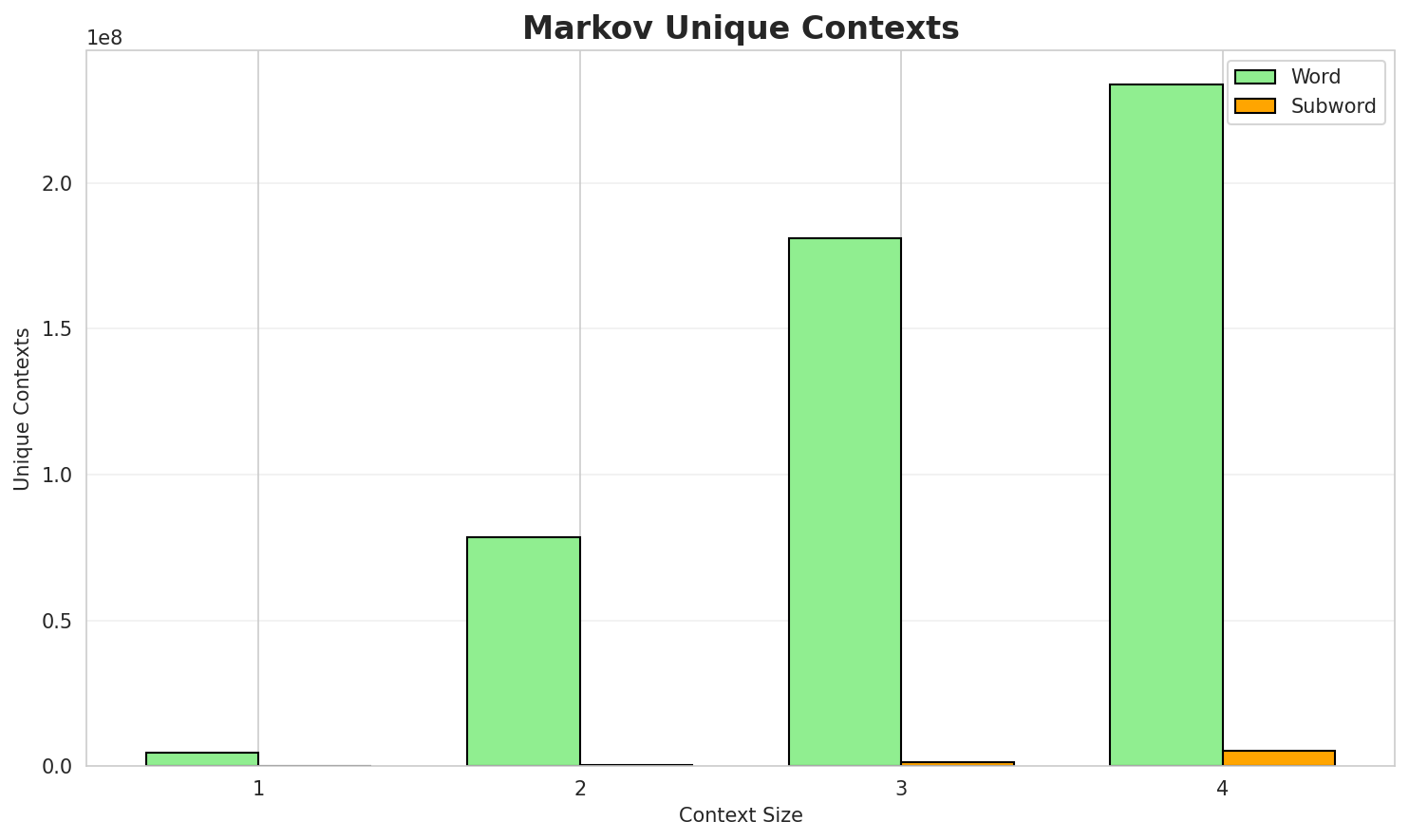

3. Markov Chain Evaluation

Results

| Context | Variant | Avg Entropy | Perplexity | Branching Factor | Unique Contexts | Predictability |

|---|---|---|---|---|---|---|

| 1 | Word | 0.9908 | 1.987 | 17.58 | 4,471,621 | 0.9% |

| 1 | Subword | 1.3702 | 2.585 | 13.33 | 18,570 | 0.0% |

| 2 | Word | 0.3659 | 1.289 | 2.31 | 78,540,786 | 63.4% |

| 2 | Subword | 0.7295 | 1.658 | 5.21 | 247,596 | 27.1% |

| 3 | Word | 0.1310 | 1.095 | 1.29 | 181,002,468 | 86.9% |

| 3 | Subword | 0.6782 | 1.600 | 4.14 | 1,290,623 | 32.2% |

| 4 | Word | 0.0499 🏆 | 1.035 | 1.09 | 233,679,791 | 95.0% |

| 4 | Subword | 0.6490 | 1.568 | 3.51 | 5,343,485 | 35.1% |

Generated Text Samples (Word-based)

Below are text samples generated from each word-based Markov chain model:

Context Size 1:

- في المدائن وهي منتزه نيقولا الصايغ أميناً عاماً ونسبة 22 مايو حين سجلت في مجال تعليم

- من مونتريال اسمه إلى الساحل في الإصدار الرابع قبل الرابطة مع نادي ثون نادي سيون ببطولة

- على الصيد فلا يطالب بتنفيذها أو وجود منافسة ألعاب البحر في حين احتفظت بهويتها الجديدة بقيمة

Context Size 2:

- كرة قدم من قصرش مقاطعة إسبان من كتالونيا إسبانيات في القرن 20 استمر التعليم التطوري أو التنموي

- في القرن 11 في وقتٍ واحد غابرييلا قرنفل وقرفة ترجمة عوض أحمد بن عبد الله الأميرة منيرة

- في عام أن تكلفة الوجبة البسيطة في نسج الظهارية ثخانة الجلد وتصلبه المترافقين مع المشكلات التي تنشأ

Context Size 3:

- في القرن 20 أمريكيون أفارقة في القرن 21 كرة قدم رجالية أحياء دوري الدرجة الأولى الأرجنتيني فيليز سار...

- مراجع وصلات خارجية كرة قدم رجالية مغتربون في روسيا على أنها قوة بحرية صغيرة إلى مدينة تشهد حركة

- في الولايات المتحدة مراجع وصلات خارجية تلفزيونية مصرية بدأ عرضها في كوميديا سوداء تلفزيونية بريطانية...

Context Size 4:

- كرة قدم مغتربون في السلفادور كرة قدم هندوراسيون كرة قدم هندوراسيون مغتربون كوبا سينتروأمريكانا منتخب...

- تحت سن الثامنة عشر تعيش معهم وبلغت نسبة الأزواج القاطنين مع بعضهم البعض 46 3 من أصل المجموع الكلي

- هو لاعب كرة قدم بريطاني في مركز لعب مع برادفورد سيتي وريث روفرز ونادي بارتيك ثيسل ونادي رينجرز ونادي

Generated Text Samples (Subword-based)

Below are text samples generated from each subword-based Markov chain model:

Context Size 1:

_فيا،_دارب_ي_أمراقصالمعب_ع_حمالملبطة_قالمندواب_ا

Context Size 2:

الأخرها_تشت_علية__الممثل_أصدققه_حاة_لدعار_الة)_جوزي

Context Size 3:

_الذين_حليلار_رُزِق__في_إحصاءات_الله)،في_الوالصحيحًا_كرة_

Context Size 4:

_في_جمهور._جسدت_ديكة_البلدي_في_اخترعه__المتحدة._يقدمه_في_

Key Findings

- Best Predictability: Context-4 (word) with 95.0% predictability

- Branching Factor: Decreases with context size (more deterministic)

- Memory Trade-off: Larger contexts require more storage (subword context-4 holds 5,343,485 unique contexts; word context-4 holds 233,679,791)

- Recommendation: Context-3 or Context-4 for text generation

4. Vocabulary Analysis

Statistics

| Metric | Value |

|---|---|

| Vocabulary Size | 1,950,572 |

| Total Tokens | 322,254,287 |

| Mean Frequency | 165.21 |

| Median Frequency | 4 |

| Frequency Std Dev | 12979.56 |

Most Common Words

| Rank | Word | Frequency |

|---|---|---|

| 1 | في | 14,286,084 |

| 2 | من | 8,287,878 |

| 3 | على | 3,284,746 |

| 4 | إلى | 2,443,493 |

| 5 | عام | 1,621,280 |

| 6 | أن | 1,387,527 |

| 7 | مع | 1,153,439 |

| 8 | عن | 1,144,208 |

| 9 | أو | 1,098,905 |

| 10 | التي | 1,084,821 |

Least Common Words (from vocabulary)

| Rank | Word | Frequency |

|---|---|---|

| 1 | dekréty | 2 |

| 2 | تادينا | 2 |

| 3 | بوكسوري | 2 |

| 4 | نموذجاالأدب | 2 |

| 5 | كنونالأدب | 2 |

| 6 | وليتاز | 2 |

| 7 | حكمٌّ | 2 |

| 8 | أسديراكي | 2 |

| 9 | إنتركوليجيت | 2 |

| 10 | للفيزيولوجية | 2 |

Zipf's Law Analysis

| Metric | Value |

|---|---|

| Zipf Coefficient | 0.9488 |

| R² (Goodness of Fit) | 0.991144 |

| Adherence Quality | excellent |

Coverage Analysis

| Top N Words | Coverage |

|---|---|

| Top 100 | 23.1% |

| Top 1,000 | 45.9% |

| Top 5,000 | 66.1% |

| Top 10,000 | 74.2% |

Key Findings

- Zipf Compliance: R²=0.9911 indicates excellent adherence to Zipf's law

- High Frequency Dominance: Top 100 words cover 23.1% of corpus

- Long Tail: 1,940,572 words needed for remaining 25.8% coverage
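The long-tail figure follows from simple arithmetic on the statistics above. The sketch below reproduces it; the variable names are illustrative, and the average tail frequency is a derived estimate rather than a number reported by the pipeline.

```python
# Values copied from the Statistics and Coverage Analysis tables above.
vocab_size = 1_950_572
total_tokens = 322_254_287
coverage_top_10k = 0.742

# Mean frequency is total tokens divided by distinct words.
mean_frequency = total_tokens / vocab_size

# Everything outside the top 10,000 words forms the long tail.
tail_types = vocab_size - 10_000
tail_coverage = 1.0 - coverage_top_10k

# Derived estimate: average occurrences of a tail word.
avg_tail_frequency = tail_coverage * total_tokens / tail_types

print(f"mean frequency: {mean_frequency:.2f}")
print(f"tail: {tail_types:,} words cover {tail_coverage:.1%} of tokens")
print(f"average tail frequency: {avg_tail_frequency:.1f}")
```

The gap between the mean (165.21) and the median (4) reflects the same skew: a few very frequent words pull the mean far above what a typical word sees.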

5. Word Embeddings Evaluation

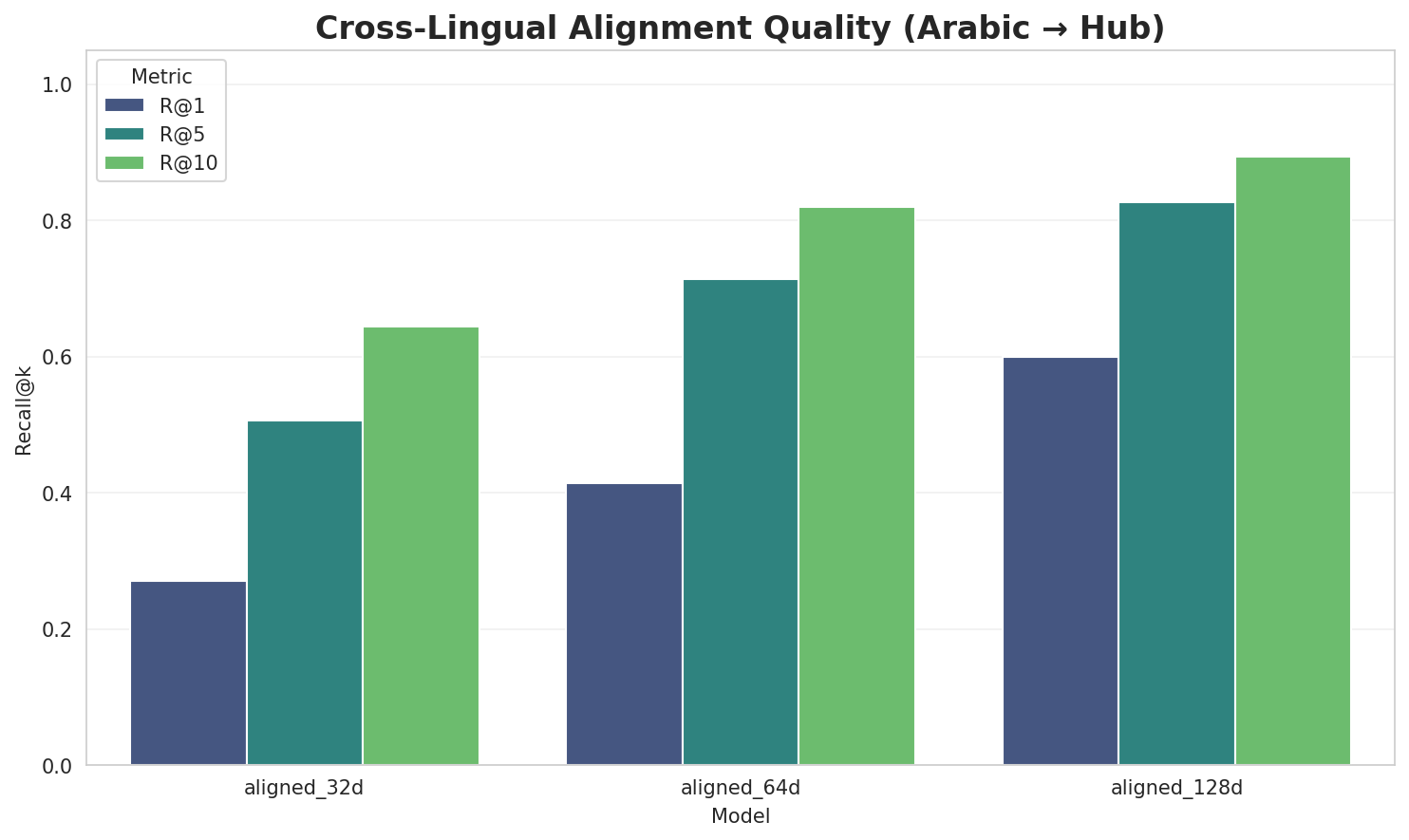

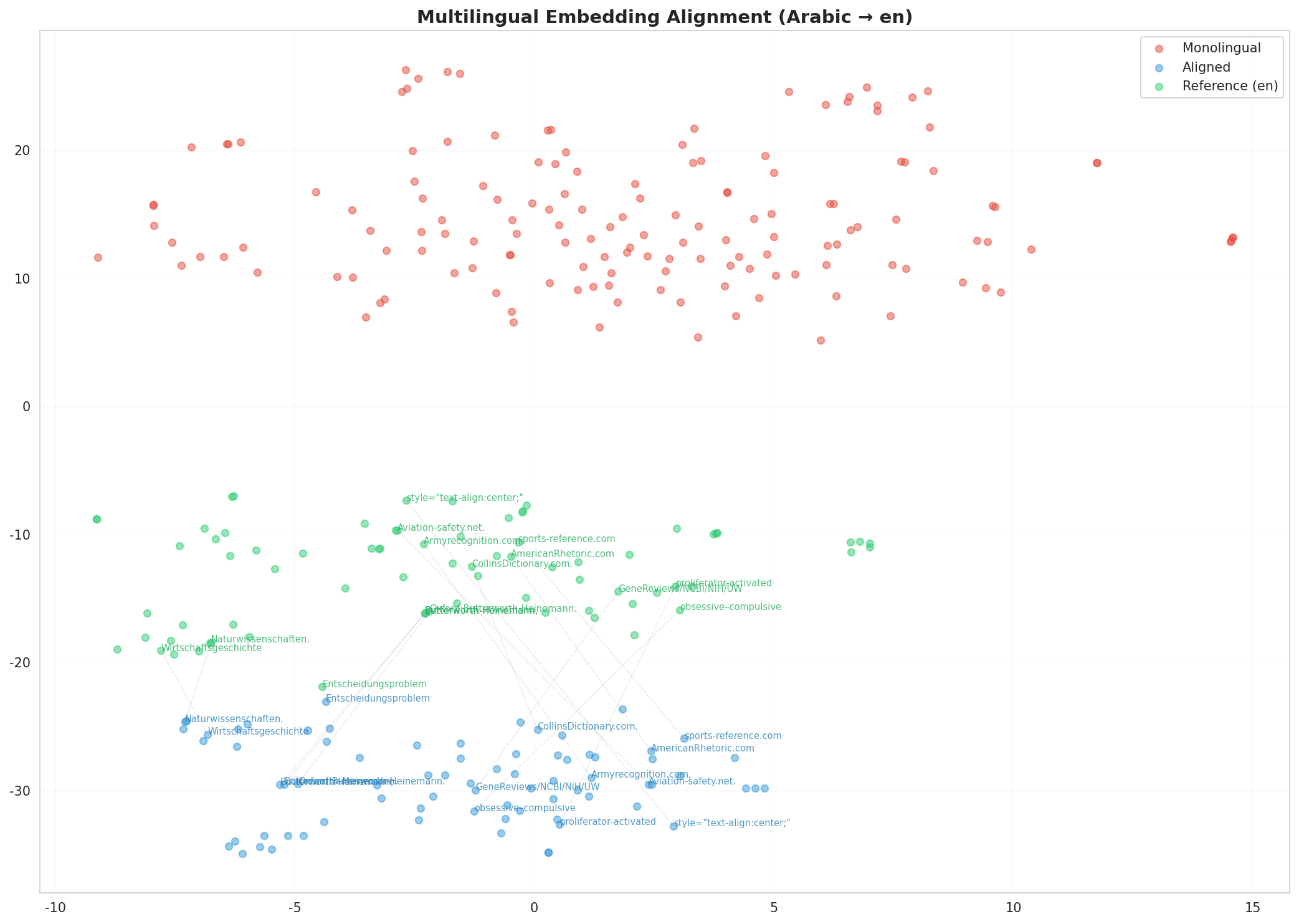

5.1 Cross-Lingual Alignment

5.2 Model Comparison

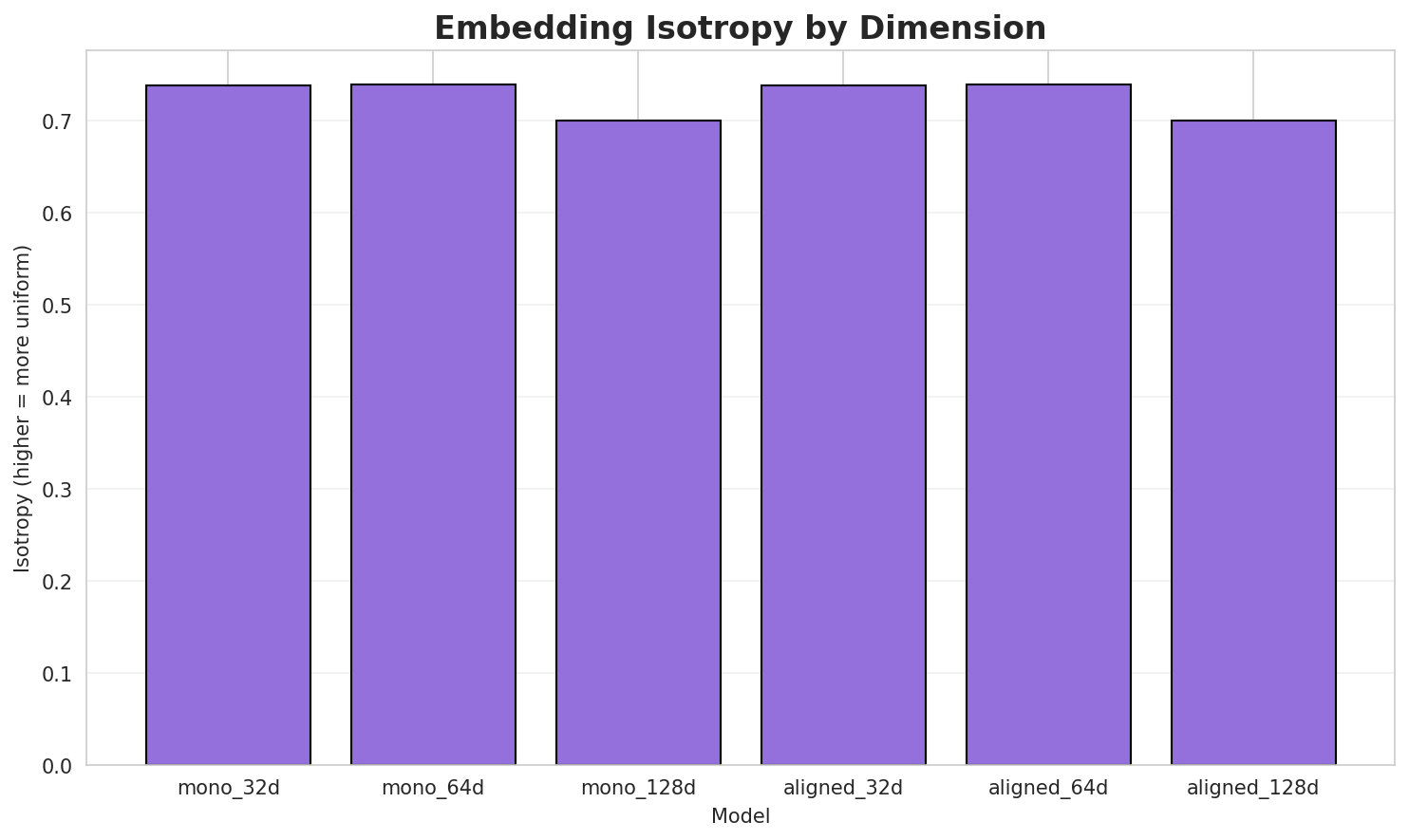

| Model | Dimension | Isotropy | Semantic Density | Alignment R@1 | Alignment R@10 |

|---|---|---|---|---|---|

| mono_32d | 32 | 0.7379 | 0.3519 | N/A | N/A |

| mono_64d | 64 | 0.7394 🏆 | 0.2816 | N/A | N/A |

| mono_128d | 128 | 0.7002 | 0.2259 | N/A | N/A |

| aligned_32d | 32 | 0.7379 | 0.3528 | 0.2700 | 0.6440 |

| aligned_64d | 64 | 0.7394 | 0.2881 | 0.4140 | 0.8200 |

| aligned_128d | 128 | 0.7002 | 0.2283 | 0.6000 | 0.8940 |

Key Findings

- Best Isotropy: mono_64d with 0.7394 (more uniform distribution)

- Semantic Density: Average pairwise similarity ranges from 0.2259 (mono_128d) to 0.3528 (aligned_32d); aligned_64d scores 0.2881. Lower values indicate better semantic separation.

- Alignment Quality: Aligned models achieve up to 60.0% R@1 in cross-lingual retrieval.

- Recommendation: 128d aligned for best cross-lingual performance
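For readers unfamiliar with R@1 and R@10, the following sketch shows one common way to compute Recall@k for cross-lingual retrieval. It assumes that `source_vecs[i]` and `target_vecs[i]` are embeddings of a translation pair and that candidates are ranked by cosine similarity; the report does not state how the pipeline builds its evaluation pairs, so treat both as assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def recall_at_k(source_vecs, target_vecs, k):
    """Fraction of sources whose true target (same index) ranks in the top k."""
    hits = 0
    for i, src in enumerate(source_vecs):
        ranked = sorted(
            range(len(target_vecs)),
            key=lambda j: cosine(src, target_vecs[j]),
            reverse=True,
        )
        if i in ranked[:k]:
            hits += 1
    return hits / len(source_vecs)


# Toy data: the third source vector is closest to the wrong target.
source = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.2]]
target = [[0.9, 0.1], [0.2, 0.8], [1.0, 0.9]]

print(recall_at_k(source, target, 1))
print(recall_at_k(source, target, 2))
```

In this toy case two of three sources retrieve their pair at rank 1 and all three do within rank 2, which mirrors how R@10 is always at least as high as R@1 in the table.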

6. Morphological Analysis (Experimental)

This section presents an automated morphological analysis derived from the statistical divergence between word-level and subword-level models. By analyzing where subword predictability spikes and where word-level coverage fails, we can infer linguistic structures without supervised data.

6.1 Productivity & Complexity

| Metric | Value | Interpretation | Recommendation |

|---|---|---|---|

| Productivity Index | 5.000 | High morphological productivity | Reliable analysis |

| Idiomaticity Gap | -0.210 | Low formulaic content | - |

6.2 Affix Inventory (Productive Units)

These are the most productive prefixes and suffixes identified by sampling the vocabulary for global substitutability patterns. A unit is considered an affix if stripping it leaves a valid stem that appears in other contexts.
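The stripping criterion can be illustrated with a small sketch. It counts, for each candidate prefix, how many vocabulary words leave another vocabulary word once the prefix is removed. The function name, the length limits, and the toy vocabulary are assumptions for illustration; the pipeline's sampling and scoring are not described here.

```python
from collections import Counter


def productive_prefixes(vocabulary, max_len=3, min_stem_len=2):
    """Count prefixes whose removal leaves a stem that is itself in the vocabulary."""
    vocab = set(vocabulary)
    counts = Counter()
    for word in vocab:
        for n in range(1, max_len + 1):
            prefix, stem = word[:n], word[n:]
            if len(stem) >= min_stem_len and stem in vocab:
                counts[prefix] += 1
    return counts


# Toy vocabulary (hypothetical, not sampled from the corpus).
vocab = ["الكتاب", "كتاب", "القلم", "قلم", "البيت", "بيت", "والبيت"]
counts = productive_prefixes(vocab)

for prefix, count in counts.most_common():
    print(prefix, count)
```

Suffixes can be handled the same way by slicing from the end of the word instead of the start.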

Productive Prefixes

| Prefix | Examples |
|---|---|
| -ال | الألمانينصف, الاعتياديّ, الباكترية |
| -وا | والشجرية, والكاحِل, والميلانين |
| -وال | والشجرية, والكاحِل, والميلانين |
| -الم | المُحاضرة, المورينو, الممنوعة |

Productive Suffixes

| Suffix | Examples |
|---|---|
| -ين | ضوئيتين, بقلبين, نحوين |
| -ات | وخصوصيات, نانديات, دويركات |
| -ية | والشجرية, الباكترية, الّدودية |
| -ها | هاماريتيها, اختها, أُصولها |

6.3 Bound Stems (Lexical Roots)

Bound stems are high-frequency subword units that are semantically cohesive but rarely appear as standalone words. These often correspond to the 'core' of a word that requires inflection or derivation to be valid.

| Stem | Cohesion | Substitutability | Examples |
|---|---|---|---|
| تخدا | 2.86x | 173 contexts | متخدا, كتخدا, متخداً |
| ستخد | 2.18x | 623 contexts | مستخد, استخد, تستخد |
| ألعا | 2.68x | 82 contexts | ألعاد, ألعاب, ألعالم |
| والع | 1.74x | 629 contexts | والعز, والعي, والعى |
| اطعة | 3.13x | 28 contexts | قاطعة, ساطعة, ساطعةً |
| التع | 1.63x | 578 contexts | التعة, التعس, التعب |
| رنسي | 1.82x | 179 contexts | درنسي, رنسيس, فرنسي |
| استخ | 1.79x | 192 contexts | استخم, استخد, استخر |
| ريطا | 2.08x | 85 contexts | غريطا, شريطا, وشريطا |
| لمنا | 1.37x | 729 contexts | تلمنا, ظلمنا, ألمنا |
| غترب | 2.44x | 39 contexts | اغترب, مغترب, يغترب |
| الحا | 1.34x | 693 contexts | الحاء, مالحا, الحاص |

6.4 Affix Compatibility (Co-occurrence)

This table shows which prefixes and suffixes most frequently co-occur on the same stems, revealing the 'stacking' rules of the language's morphology.

| Prefix | Suffix | Frequency | Examples |
|---|---|---|---|
| -ال | -ية | 95 words | الائتمانية, الويبرية |
| -ال | -ات | 76 words | الهباءات, الكوميديات |
| -ال | -ين | 68 words | البحـرين, المتوارثين |
| -وا | -ية | 35 words | والعضدية, والهانرية |
| -وا | -ات | 24 words | والمطرزات, والسلوريات |
| -وا | -ين | 17 words | والمُغنين, والميكرونيزيين |
| -وا | -ها | 4 words | واعترضتها, واستبعدتها |

6.5 Recursive Morpheme Segmentation

Using Recursive Hierarchical Substitutability, we decompose complex words into their constituent morphemes. This approach handles nested affixes (e.g., prefix-prefix-root-suffix).
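The idea of peeling nested affixes can be sketched with a greedy, longest-match stripper. This is a much simpler stand-in, not the Recursive Hierarchical Substitutability method itself, whose details are not given here. The affix lists are taken from the inventory in Section 6.2, while `segment` and the `min_stem` threshold are hypothetical.

```python
PREFIXES = ["وال", "الم", "وا", "ال"]  # prefixes listed in Section 6.2
SUFFIXES = ["ين", "ات", "ية", "ها"]    # suffixes listed in Section 6.2


def segment(word: str, min_stem: int = 3) -> str:
    """Repeatedly strip known affixes from both ends, longest match first."""
    prefixes, suffixes, stem = [], [], word
    changed = True
    while changed:
        changed = False
        for p in sorted(PREFIXES, key=len, reverse=True):
            if stem.startswith(p) and len(stem) - len(p) >= min_stem:
                prefixes.append(p)
                stem = stem[len(p):]
                changed = True
                break
        for s in sorted(SUFFIXES, key=len, reverse=True):
            if stem.endswith(s) and len(stem) - len(s) >= min_stem:
                suffixes.insert(0, s)  # keep suffixes in surface order
                stem = stem[: -len(s)]
                changed = True
                break
    return "-".join(prefixes + [stem] + suffixes)


for word in ["البروتينين", "والكاظمية", "إسقاطاتها"]:
    print(word, "->", segment(word))
```

A greedy stripper like this over-segments some words (for example, it also removes a trailing -ين from a stem that should stay whole), which is the kind of error a confidence score is meant to flag.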

| Word | Suggested Split | Confidence | Stem |
|---|---|---|---|
| البروتينين | ال-بروت-ين-ين | 7.5 | بروت |
| والكاظمية | وال-كاظم-ية | 6.0 | كاظم |
| والسرورية | وال-سرور-ية | 6.0 | سرور |
| الغيلوغية | ال-غيلوغ-ية | 6.0 | غيلوغ |
| والحطابين | وال-حطاب-ين | 6.0 | حطاب |
| والمقدسيين | وال-مقدسي-ين | 6.0 | مقدسي |
| والنجومية | وال-نجوم-ية | 6.0 | نجوم |
| والرباعيات | وال-رباعي-ات | 6.0 | رباعي |
| الكلابشات | ال-كلابش-ات | 6.0 | كلابش |
| السبعينات | ال-سبعين-ات | 6.0 | سبعين |
| لاحتجاجاتها | لاحتجاج-ات-ها | 6.0 | لاحتجاج |
| والمكسّرات | وال-مكسّر-ات | 6.0 | مكسّر |
| والسكيريين | وال-سكيري-ين | 6.0 | سكيري |
| إسقاطاتها | إسقاط-ات-ها | 6.0 | إسقاط |
| واستثمارها | وا-ستثمار-ها | 6.0 | ستثمار |

6.6 Linguistic Interpretation

Automated Insight: Arabic shows high morphological productivity. The subword models are significantly more efficient than the word models, suggesting a rich system of affixation or compounding.

7. Summary & Recommendations

Production Recommendations

| Component | Recommended | Rationale |

|---|---|---|

| Tokenizer | 64k BPE | Best compression (4.35x) |

| N-gram | 2-gram (subword) | Lowest perplexity (436) |

| Markov | Context-4 | Highest predictability (95.0%) |

| Embeddings | 64d (128d aligned for cross-lingual use) | Highest isotropy (0.7394) at 64d; best alignment R@1 (60.0%) at 128d |

Appendix: Metrics Glossary & Interpretation Guide

This section provides definitions, intuitions, and guidance for interpreting the metrics used throughout this report.

Tokenizer Metrics

Compression Ratio

Definition: The ratio of characters to tokens (chars/token). Measures how efficiently the tokenizer represents text.

Intuition: Higher compression means fewer tokens needed to represent the same text, reducing sequence lengths for downstream models. A 3x compression means ~3 characters per token on average.

What to seek: Higher is generally better for efficiency, but extremely high compression may indicate overly aggressive merging that loses morphological information.
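As a quick check of this definition, the sketch below inverts it: multiplying each reported compression ratio by its total token count should give the number of characters that were tokenized. The helper name is illustrative, and the claim that all tokenizers were scored on the same text is an inference from the numbers, not something the report states.

```python
def compression_ratio(num_chars: int, num_tokens: int) -> float:
    """Characters per token; higher means fewer tokens for the same text."""
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    return num_chars / num_tokens


# (compression, total tokens) copied from the tokenizer results table.
reported = {
    "8k": (3.252, 5_499_500),
    "16k": (3.655, 4_893_689),
    "32k": (4.034, 4_433_903),
    "64k": (4.347, 4_114_555),
}

# Inverting the definition gives the character count each row implies.
implied_chars = {name: ratio * tokens for name, (ratio, tokens) in reported.items()}

for name, chars in implied_chars.items():
    print(f"{name}: ~{chars / 1e6:.2f}M characters")
```

All four rows imply roughly 17.9 million characters, which is what one would expect if every vocabulary size was evaluated on the same text.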

Average Token Length (Fertility)

Definition: Mean number of characters per token produced by the tokenizer.

Intuition: Reflects the granularity of tokenization. Longer tokens capture more context but may struggle with rare words; shorter tokens are more flexible but increase sequence length.

What to seek: Balance between 2-5 characters for most languages. Arabic/morphologically-rich languages may benefit from slightly longer tokens.

Unknown Token Rate (OOV Rate)

Definition: Percentage of tokens that map to the unknown/UNK token, indicating words the tokenizer cannot represent.

Intuition: Lower OOV means better vocabulary coverage. High OOV indicates the tokenizer encounters many unseen character sequences.

What to seek: Below 1% is excellent; below 5% is acceptable. BPE tokenizers typically achieve very low OOV due to subword fallback.

N-gram Model Metrics

Perplexity

Definition: Measures how "surprised" the model is by test data. Mathematically: 2^(cross-entropy). Lower values indicate better prediction.

Intuition: If perplexity is 100, the model is as uncertain as if choosing uniformly among 100 options at each step. A perplexity of 10 means effectively choosing among 10 equally likely options.

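What to seek: Lower is better. For a conditional next-token model, perplexity usually decreases as n grows (more context); the values in Section 2 instead rise with n, in step with the number of unique n-grams. Values vary widely by language and corpus size.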

Entropy

Definition: Average information content (in bits) needed to encode the next token given the context. Related to perplexity: perplexity = 2^entropy.

Intuition: High entropy means high uncertainty/randomness; low entropy means predictable patterns. Natural language typically has entropy between 1-4 bits per character.

What to seek: Lower entropy indicates more predictable text patterns. Conditional entropy usually decreases as n-gram size increases, although the entropies reported in Section 2 increase with n.
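The relation perplexity = 2^entropy can be checked directly against the n-gram results table. The sketch below also includes a small Shannon entropy helper; the function names are illustrative, and the small mismatches are expected because the table rounds entropy to two decimals.

```python
import math
from collections import Counter


def entropy_bits(counts: Counter) -> float:
    """Shannon entropy (base 2) of the empirical distribution in `counts`."""
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def perplexity(entropy: float) -> float:
    """Perplexity as defined in this glossary: 2 ** entropy."""
    return 2.0 ** entropy


# Four equally likely items: 2 bits of entropy, perplexity 4.
uniform = Counter({"a": 5, "b": 5, "c": 5, "d": 5})
print(entropy_bits(uniform), perplexity(entropy_bits(uniform)))

# (entropy, perplexity) pairs copied from the n-gram results table.
reported = {
    "2-gram word": (18.79, 452_226),
    "2-gram subword": (8.77, 436),
    "3-gram subword": (12.04, 4_203),
}
relative_error = {
    name: abs(perplexity(h) - ppl) / ppl for name, (h, ppl) in reported.items()
}
for name, err in relative_error.items():
    print(f"{name}: relative error {err:.2%}")
```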

Coverage (Top-K)

Definition: Percentage of corpus occurrences explained by the top K most frequent n-grams.

Intuition: High coverage with few patterns indicates repetitive/formulaic text; low coverage suggests diverse vocabulary usage.

What to seek: Depends on use case. For language modeling, moderate coverage (40-60% with top-1000) is typical for natural text.
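A minimal sketch of Top-K coverage on a toy token sequence follows. The helper names are illustrative and the pipeline's own counting (for example, how sentence boundaries are handled) may differ.

```python
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def top_k_coverage(counts: Counter, k: int) -> float:
    """Share of all n-gram occurrences covered by the k most frequent n-grams."""
    total = sum(counts.values())
    covered = sum(count for _, count in counts.most_common(k))
    return covered / total


tokens = "a b a b a c".split()
bigrams = ngram_counts(tokens, 2)

for k in (1, 2, 3):
    print(f"top-{k} coverage: {top_k_coverage(bigrams, k):.0%}")
```

Here five bigram occurrences fall into three types, so the single most frequent bigram already covers 40% of occurrences.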

Markov Chain Metrics

Average Entropy

Definition: Mean entropy across all contexts, measuring average uncertainty in next-word prediction.

Intuition: Lower entropy means the model is more confident about what comes next. Context-1 has high entropy (many possible next words); Context-4 has low entropy (few likely continuations).

What to seek: Decreasing entropy with larger context sizes. Very low entropy (<0.1) indicates highly deterministic transitions.

Branching Factor

Definition: Average number of unique next tokens observed for each context.

Intuition: High branching = many possible continuations (flexible but uncertain); low branching = few options (predictable but potentially repetitive).

What to seek: Branching factor should decrease with context size. Values near 1.0 indicate nearly deterministic chains.
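The following sketch builds a small Markov chain and computes its branching factor and average entropy. It assumes an unweighted mean over contexts; the report does not say whether the pipeline weights contexts by frequency, so the exact numbers it produces may be defined differently.

```python
import math
from collections import Counter, defaultdict


def build_chain(tokens, context_size):
    """Map each context tuple to a Counter of the tokens that follow it."""
    chain = defaultdict(Counter)
    for i in range(len(tokens) - context_size):
        context = tuple(tokens[i:i + context_size])
        chain[context][tokens[i + context_size]] += 1
    return chain


def branching_factor(chain):
    """Average number of distinct next tokens per context."""
    return sum(len(nxt) for nxt in chain.values()) / len(chain)


def average_entropy(chain):
    """Unweighted mean of per-context next-token entropy, in bits."""
    total = 0.0
    for nxt in chain.values():
        n = sum(nxt.values())
        total += -sum((c / n) * math.log2(c / n) for c in nxt.values())
    return total / len(chain)


tokens = "a b a c a b".split()
chain1 = build_chain(tokens, 1)
chain2 = build_chain(tokens, 2)

print(branching_factor(chain1), average_entropy(chain1))
print(branching_factor(chain2), average_entropy(chain2))
```

With one token of context, "a" is followed by either "b" or "c"; with two tokens of context every continuation in this toy sequence is unique, so the branching factor drops to 1 and the entropy to 0.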

Predictability

Definition: Derived metric: (1 - normalized_entropy) × 100%. Indicates how deterministic the model's predictions are.

Intuition: 100% predictability means the next word is always certain; 0% means completely random. Real text falls between these extremes.

What to seek: Higher predictability for text generation quality, but too high (>98%) may produce repetitive output.
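The table in Section 3 appears consistent with a simple reading of this formula: normalize by 1 bit and clip at zero, with perplexity equal to 2^entropy. The sketch below checks that reading against every reported row. The normalization constant is an inference from the numbers, not a documented pipeline setting.

```python
# (variant, context, avg entropy, perplexity, predictability %) from the results table.
rows = [
    ("word", 1, 0.9908, 1.987, 0.9),
    ("subword", 1, 1.3702, 2.585, 0.0),
    ("word", 2, 0.3659, 1.289, 63.4),
    ("subword", 2, 0.7295, 1.658, 27.1),
    ("word", 3, 0.1310, 1.095, 86.9),
    ("subword", 3, 0.6782, 1.600, 32.2),
    ("word", 4, 0.0499, 1.035, 95.0),
    ("subword", 4, 0.6490, 1.568, 35.1),
]


def predictability(avg_entropy: float, max_entropy: float = 1.0) -> float:
    """(1 - normalized entropy) * 100, clipped at 0; max_entropy=1 bit is an assumption."""
    return max(0.0, 1.0 - avg_entropy / max_entropy) * 100.0


# Largest disagreement between the formulas and the reported values.
max_pred_gap = max(abs(predictability(h) - pred) for _, _, h, _, pred in rows)
max_ppl_gap = max(abs(2.0 ** h - ppl) for _, _, h, ppl, _ in rows)

print(f"largest predictability gap: {max_pred_gap:.3f} percentage points")
print(f"largest perplexity gap: {max_ppl_gap:.4f}")
```

Both gaps stay within rounding error, including the subword context-1 row, where an entropy above 1 bit is clipped to 0.0%.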

Vocabulary & Zipf's Law Metrics

Zipf's Coefficient

Definition: The slope of the log-log plot of word frequency vs. rank. Zipf's law predicts this should be approximately -1. The value reported in Section 4 (0.9488) appears to be the magnitude of that slope.

Intuition: A coefficient near -1 indicates the corpus follows natural language patterns where a few words are very common and most words are rare.

What to seek: Values between -0.8 and -1.2 indicate healthy natural language distribution. Deviations may suggest domain-specific or artificial text.

R² (Coefficient of Determination)

Definition: Measures how well the linear fit explains the frequency-rank relationship. Ranges from 0 to 1.

Intuition: R² near 1.0 means the data closely follows Zipf's law; lower values indicate deviation from expected word frequency patterns.

What to seek: R² > 0.95 is excellent; > 0.99 indicates near-perfect Zipf adherence typical of large natural corpora.
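The slope and R² can be obtained with an ordinary least-squares fit in log-log space. The sketch below uses only the standard library and an idealized frequency list; whether the pipeline fits all ranks or a subset is not stated, so `zipf_fit` is an illustration rather than its method.

```python
import math


def zipf_fit(frequencies):
    """Least-squares fit of log10(frequency) against log10(rank).

    Returns (slope, r_squared). Frequencies must be positive.
    """
    freqs = sorted(frequencies, reverse=True)
    xs = [math.log10(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log10(f) for f in freqs]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    r_squared = (sxy * sxy) / (sxx * syy)
    return slope, r_squared


# Idealized Zipfian frequencies: f(rank) = C / rank.
ideal = [1_000_000 / rank for rank in range(1, 1001)]
slope, r_squared = zipf_fit(ideal)
print(f"slope={slope:.4f}, R^2={r_squared:.6f}")
```

A perfectly Zipfian list gives a slope of -1 and R² of 1; real corpora land close to, but not exactly on, those values.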

Vocabulary Coverage

Definition: Cumulative percentage of corpus tokens accounted for by the top N words.

Intuition: Shows how concentrated word usage is. If top-100 words cover 50% of text, the corpus relies heavily on common words.

What to seek: Top-100 covering 30-50% is typical. Higher coverage indicates more repetitive text; lower suggests richer vocabulary.

Word Embedding Metrics

Isotropy

Definition: Measures how uniformly distributed vectors are in the embedding space. Computed as the ratio of minimum to maximum singular values.

Intuition: High isotropy (near 1.0) means vectors spread evenly in all directions; low isotropy means vectors cluster in certain directions, reducing expressiveness.

What to seek: Higher isotropy generally indicates better-quality embeddings. Values > 0.1 are reasonable; > 0.3 is good. Lower-dimensional embeddings tend to have higher isotropy.

Average Norm

Definition: Mean magnitude (L2 norm) of word vectors in the embedding space.

Intuition: Indicates the typical "length" of vectors. Consistent norms suggest stable training; high variance may indicate some words are undertrained.

What to seek: Relatively consistent norms across models. The absolute value matters less than consistency (low std deviation).

Cosine Similarity

Definition: Measures angular similarity between vectors, ranging from -1 (opposite) to 1 (identical direction).

Intuition: Words with similar meanings should have high cosine similarity. This is the standard metric for semantic relatedness in embeddings.

What to seek: Semantically related words should score > 0.5; unrelated words should be near 0. Synonyms often score > 0.7.
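Cosine similarity, and the average pairwise similarity that Section 5 reports as semantic density, can be sketched as follows. The vectors are toy values and `mean_pairwise_cosine` is a hypothetical helper; the pipeline may sample word pairs rather than use all of them.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def mean_pairwise_cosine(vectors):
    """Average cosine similarity over all unordered pairs of vectors."""
    pairs = list(combinations(vectors, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

print(cosine(vectors[0], vectors[1]))   # orthogonal vectors
print(cosine(vectors[0], [-1.0, 0.0]))  # opposite direction
print(mean_pairwise_cosine(vectors))
```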

t-SNE Visualization

Definition: t-Distributed Stochastic Neighbor Embedding - a dimensionality reduction technique that preserves local structure for visualization.

Intuition: Clusters in t-SNE plots indicate groups of semantically related words. Spread indicates vocabulary diversity; tight clusters suggest semantic coherence.

What to seek: Meaningful clusters (e.g., numbers together, verbs together). Avoid over-interpreting distances - t-SNE preserves local, not global, structure.

General Interpretation Guidelines

- Compare within model families: Metrics are most meaningful when comparing models of the same type (e.g., 8k vs 64k tokenizer).

- Consider trade-offs: Better performance on one metric often comes at the cost of another (e.g., compression vs. OOV rate).

- Context matters: Optimal values depend on downstream tasks. Text generation may prioritize different metrics than classification.

- Corpus influence: All metrics are influenced by corpus characteristics. Wikipedia text differs from social media or literature.

- Language-specific patterns: Morphologically rich languages (like Arabic) may show different optimal ranges than analytic languages.

Visualizations Index

| Visualization | Description |

|---|---|

| Tokenizer Compression | Compression ratios by vocabulary size |

| Tokenizer Fertility | Average token length by vocabulary |

| Tokenizer OOV | Unknown token rates |

| Tokenizer Total Tokens | Total tokens by vocabulary |

| N-gram Perplexity | Perplexity by n-gram size |

| N-gram Entropy | Entropy by n-gram size |

| N-gram Coverage | Top pattern coverage |

| N-gram Unique | Unique n-gram counts |

| Markov Entropy | Entropy by context size |

| Markov Branching | Branching factor by context |

| Markov Contexts | Unique context counts |

| Zipf's Law | Frequency-rank distribution with fit |

| Vocab Frequency | Word frequency distribution |

| Top 20 Words | Most frequent words |

| Vocab Coverage | Cumulative coverage curve |

| Embedding Isotropy | Vector space uniformity |

| Embedding Norms | Vector magnitude distribution |

| Embedding Similarity | Word similarity heatmap |

| Nearest Neighbors | Similar words for key terms |

| t-SNE Words | 2D word embedding visualization |

| t-SNE Sentences | 2D sentence embedding visualization |

| Position Encoding | Encoding method comparison |

| Model Sizes | Storage requirements |

| Performance Dashboard | Comprehensive performance overview |

About This Project

Data Source

Models trained on wikipedia-monthly - a monthly snapshot of Wikipedia articles across 300+ languages.

Project

A project by Wikilangs - Open-source NLP models for every Wikipedia language.

Maintainer

Citation

If you use these models in your research, please cite:

@misc{wikilangs2025,
  author = {Kamali, Omar},
  title = {Wikilangs: Open NLP Models for Wikipedia Languages},
  year = {2025},
  doi = {10.5281/zenodo.18073153},
  publisher = {Zenodo},
  url = {https://huggingface.co/wikilangs},
  institution = {Omneity Labs}
}

License

MIT License - Free for academic and commercial use.

Links

- 🌐 Website: wikilangs.org

- 🤗 Models: huggingface.co/wikilangs

- 📊 Data: wikipedia-monthly

- 👤 Author: Omar Kamali

- 🤝 Sponsor: Featherless AI

Generated by Wikilangs Models Pipeline

Report Date: 2026-01-07 13:14:53